When I first heard about AI-powered glasses that could recognize faces and pull up personal info in seconds, it sounded like science fiction. Then I realized it’s not only real, it has already been built by college students as a casual side project. I’ll never forget meeting a friend at a coffee shop, only to spot him quietly adjusting a nondescript pair of glasses. What happened next made me question whether I was ever truly 'off the grid.' This post digs into the invention of I-XRAY, the new realities of wearable AI, and why it’s time we all got a little paranoid, in the best, self-protective way.

I-XRAY: The Birthday Party Demo that Became a Privacy Nightmare

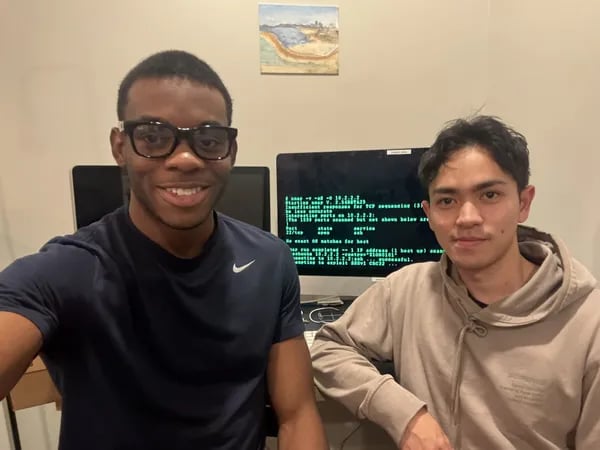

What started as a fun tech demo for a Harvard birthday party quickly evolved into one of the most disturbing demonstrations of modern privacy risks. Two Harvard students, AnhPhu Nguyen and Caine Ardayfio, created I-XRAY—a system that combines AI smart glasses with facial recognition software to expose strangers' personal information in real time.

"I started building this for a birthday party of mine," Nguyen recalls. "Like, we thought it'd be interesting as a demo." But as they showed their creation to more people, the implications became clear.

"We realized it was a huge security and privacy issue."

How I-XRAY Exposes Personal Information in Seconds

The technology behind I-XRAY reveals just how exposed our personal information has become. Using nothing more than commercially available tools, the system can identify complete strangers and display their sensitive data through augmented reality overlays in under 60 seconds.

The process is frighteningly fast. Meta Ray-Ban glasses capture someone's face, then PimEyes, a publicly available reverse face search engine, scours the web for matching images. Once a match is found, FastPeopleSearch and similar data aggregation services supply names, addresses, phone numbers, and even partial Social Security numbers from publicly accessible records.

Large language models enhance the system's capabilities even further. As Nguyen explains, "once you have LLMs, you can not only get the name, but you can also search for that home address after you get the name, and LLMs also filter through … and logically figure out, oh, this person must be like around their 20s or 30s and 40s."
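To see why the chain is so effective, and where it can be broken, it helps to model each step as something that needs certain facts and reveals new ones. The sketch below is only a simplified model of the pipeline described above, not the creators' code: the stage names, the field names, and the `exposed_facts` helper are all illustrative assumptions, and nothing here contacts a real service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    needs: frozenset   # facts the stage requires before it can run
    yields: frozenset  # facts the stage adds once it has run


# Hypothetical, simplified model of the chain; field names are illustrative.
PIPELINE = [
    Stage("glasses_capture", frozenset(), frozenset({"face_photo"})),
    Stage("face_search", frozenset({"face_photo"}), frozenset({"matching_pages"})),
    Stage("llm_extraction", frozenset({"matching_pages"}),
          frozenset({"name", "estimated_age"})),
    Stage("people_search", frozenset({"name"}),
          frozenset({"home_address", "phone_number"})),
]


def exposed_facts(pipeline, opted_out=()):
    """Return the facts an observer ends up with, skipping any stage
    whose data source the person has successfully opted out of."""
    known = set()
    for stage in pipeline:
        if stage.name in opted_out:
            continue  # this source no longer returns anything about you
        if stage.needs <= known:  # stage can only run if its inputs exist
            known |= stage.yields
    return known


if __name__ == "__main__":
    print(sorted(exposed_facts(PIPELINE)))
    print(sorted(exposed_facts(PIPELINE, opted_out={"face_search"})))
```

In this model, removing yourself from the face search step leaves an observer with nothing but the photo, because every later stage depends on what that step returns. Removing yourself only from the people-search step still leaves a name and an age estimate exposed.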

The Disturbing Accessibility of Surveillance Technology

What makes I-XRAY particularly alarming is how ordinary its components are. Meta Ray-Ban glasses retail for a few hundred dollars, PimEyes offers facial recognition searches to anyone with an internet connection, and people-search sites like FastPeopleSearch surface detailed personal records for free or, as the creators put it, for about $30 a month.

Research shows that facial recognition combined with AR smart glasses can expose sensitive information in real time, often without meaningful notice or consent. The scary part, as the creators discovered, is that it's all automatic. Once these technologies are tied together, retrieving someone's personal information from just a photo becomes disturbingly easy.

This accessibility means that DIY projects now rival commercial surveillance tools in capability. Unlike professional surveillance systems that require significant resources and expertise, I-XRAY proves that almost anyone can assemble a powerful surveillance tool from consumer-grade technology.

Ethical Boundaries and Responsible Development

Despite the technical success of their project, Nguyen and Ardayfio made a crucial ethical decision. Rather than release I-XRAY to the public or commercialize it, they chose to demonstrate its capabilities as a warning about privacy vulnerabilities.

The more they tested their creation, the more they realized it wasn't just a fun project—it had real consequences. They decided to put "guardrails in place so that it's not…used unsafely." Instead of marketing the tool, they used it to raise awareness about how exposed our personal data has become.

Their approach highlights an important principle in responsible technology development. When creators recognize the potential for harm, they have a choice: exploit the technology for profit or use it to educate the public about emerging threats.

A Wake-Up Call for Privacy Protection

The I-XRAY project serves as more than just a technical demonstration—it's a wake-up call about the current state of digital privacy. The creators report that millions of people have seen their video, and thousands have hopefully taken action to clear their data and become more privacy conscious.

The technology pipeline they exposed isn't theoretical or futuristic. These tools exist today, and as Nguyen points out, "Clearview is a real company that does this exact thing." Commercial applications of similar technology are inevitable.

Data Collection Risks: How Wearable AI Puts You on Display

When I first learned about Harvard students' I-XRAY project, the reality hit me immediately. These aren't your average smart glasses—they're data collection powerhouses that can expose anyone's personal information within seconds. What started as a birthday party demo quickly revealed something far more troubling about the data collection risks we face every day.

The technology behind AI smart glasses goes far beyond simple photography. These devices can capture not just images but voice recordings, location data, and behavioral patterns, often without any obvious notice to the people around the wearer. Meta's Ray-Ban smart glasses, for instance, have minimal visible privacy indicators that are easy to miss in real-life situations. That tiny LED light? Most people wouldn't even notice it in daylight or crowded spaces.

The Hidden Data Pipeline

Here's what really concerns me about these data aggregation tools. The I-XRAY system demonstrates how seamlessly different technologies connect to create a complete privacy violation. Using reverse face search engines like PimEyes, the system can take a face captured by the glasses and immediately search through countless online images. In under a minute, it can pull your name, occupation, home address, and even a partial Social Security number.

Research shows that smart glasses can record data passively, even from bystanders who haven't consented to any data collection. You might be walking down the street, completely unaware that someone's glasses are cataloging your facial features and cross-referencing them with public databases.

The process is disturbingly automatic. As one of the I-XRAY creators explained, "once you have LLMs, you can not only get the name, but you can also search for that home address after you get the name." Large language models enhance the system's ability to logically connect data points, estimating ages and filtering through information to build comprehensive profiles of strangers.

Public Databases: Your Information on Display

What shocked me most is how public databases and data aggregation tools connect a face to surprising amounts of personal information. Services like FastPeopleSearch operate with minimal restrictions, especially in the United States. These platforms aggregate data from publicly accessible records, making it incredibly easy for AI systems to compile detailed personal profiles.

The scary part? Most people have no idea their information is even available through these channels. Many individuals discovered their details were publicly searchable only after seeing demonstrations of tools like I-XRAY. One creator noted receiving comments from "a lot of people [that] are quite surprised that they're just on this company's website where anyone can search this for free or for 30 bucks a month."

The Opt-Out Problem

This brings me to one of the most frustrating aspects of current privacy protections. Opting out of data aggregation services is often confusing, time-consuming, and sometimes even costly. The lack of robust opt-out options creates lasting privacy risks that most people can't effectively address.

"It shouldn't be so hard to protect your privacy," said Caine Ardayfio, one of I-XRAY's creators.

The regulatory landscape makes this problem worse. While Europe's GDPR treats biometric data used to identify a person as a special category with strict processing limits, the US has no comprehensive federal privacy law, so services like FastPeopleSearch and PimEyes operate there with few constraints. This creates a concerning gap in privacy protections, especially when dealing with wearable AI devices that can process data instantly.

Minimal Safeguards, Maximum Exposure

Current privacy indicators on smart glasses are woefully inadequate. That small LED light on Meta Ray-Bans? To me, it looks designed more for legal compliance than for actual privacy protection. In bright outdoor settings or busy environments, these indicators become practically invisible to bystanders.

The data collection is automatic and far-reaching, but meaningful user control is virtually absent. You can't opt out of being recorded by someone else's glasses, and you often can't even tell when it's happening. The privacy protections we desperately need simply don't exist in most jurisdictions.

Studies indicate that wearable AI devices act as persistent data gateways, increasing exposure to privacy violations beyond traditional digital platforms. Unlike smartphones, which require active use, smart glasses can continuously collect environmental data, including information about people who never consented to participate in any data collection.

Beyond Science Fiction: Can We Really Control AI Surveillance in 2025?

The question isn't whether we can stop AI surveillance tools from advancing—it's whether we can shape how they're deployed. After witnessing the impact of I-XRAY's demonstration, I've come to understand that our response to these technologies will determine their ultimate effect on society.

The Reality Check: Commercial Adoption Is Already Here

What started as a Harvard dorm room project has exposed an uncomfortable truth about AI surveillance tools. While Nguyen and Ardayfio chose not to commercialize I-XRAY, others haven't shown such restraint. "Clearview is a real company that does this exact thing," Nguyen points out, referring to the facial recognition company that already operates at commercial scale.

This reality forces us to confront a critical fact: the technology behind I-XRAY isn't revolutionary, it's accessible. The creators estimate they've undertaken around 30 technical projects together, and I-XRAY was just one of them, which shows how little specialized effort a tool like this requires. When two college students can build something this powerful using existing technologies like Meta's Ray-Ban glasses and PimEyes, it becomes clear that commercial adoption is inevitable.

Privacy Protections Through Awareness and Action

The creators' decision to advocate for digital privacy protections rather than profit represents a turning point in how we approach AI surveillance. Their privacy awareness campaigns have reached millions, with the hope that thousands will take action to remove their data from online databases. But here's the frustrating reality: individual privacy actions are possible, yet rarely easy.

Opting out of data aggregation services often means navigating complex, frustrating processes. Ardayfio suggests that making big companies provide easy opt-out options should be a priority. In his words, "It shouldn't be so hard to protect your privacy."

The ethical implications of current AI surveillance tools extend beyond mere inconvenience. We're dealing with lack of consent, personal data exposure, and significant risks of misuse for fraud and abuse. Digital privacy protections aren't just nice-to-have features—they're urgent necessities for maintaining basic human dignity in our increasingly connected world.

The Path Forward: Guardrails and Regulation

I-XRAY's creators now push for clearer guardrails around AI surveillance technology. Their approach focuses on three critical areas: clearer opt-out mechanisms, stronger regulation, and continued public awareness. This isn't about stopping innovation—it's about ensuring it serves humanity rather than exploiting it.

The European Union has already restricted real-time facial recognition in many contexts, while the United States lags behind in comprehensive digital privacy protections. This regulatory gap creates opportunities for companies to operate without meaningful oversight, making public advocacy even more crucial.

"At the end of the day, tech like this isn't going away," Nguyen acknowledges. The question becomes how we'll manage its integration into society.

Individual Agency in a Surveillance Age

Despite the challenges, individuals aren't powerless. The I-XRAY project has sparked conversations about personal data security that were previously relegated to privacy advocates and tech experts. Privacy awareness campaigns have made regular people aware that their information is searchable on websites "where anyone can search this for free or for 30 bucks a month."

Personal action still matters. People can remove their data from aggregation services, adjust privacy settings, and make informed choices about what information they share online. But these individual efforts need support from broader systemic changes.
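Because opt-outs are spread across many services and easy to lose track of, a simple checklist can help. The sketch below is one possible way to track requests, not an official tool: the `OptOut` record, the `next_actions` helper, the "ExampleBroker" and "OtherBroker" entries, and the 90-day re-check interval are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class OptOut:
    service: str
    submitted: Optional[date] = None  # None means no request sent yet
    confirmed: bool = False           # True once the service confirms removal


def next_actions(requests, today, recheck_after_days=90):
    """List what still needs doing for each service.

    The 90-day default is an arbitrary assumption; pick whatever
    interval suits you.
    """
    actions = []
    for r in requests:
        if r.submitted is None:
            actions.append((r.service, "submit opt-out request"))
        elif not r.confirmed:
            actions.append((r.service, "follow up on pending request"))
        elif today - r.submitted > timedelta(days=recheck_after_days):
            actions.append((r.service, "re-check that listing is still gone"))
    return actions


if __name__ == "__main__":
    my_requests = [
        OptOut("PimEyes"),
        OptOut("FastPeopleSearch", submitted=date(2025, 1, 10)),
        OptOut("ExampleBroker", submitted=date(2024, 9, 1), confirmed=True),
        OptOut("OtherBroker", submitted=date(2025, 1, 1), confirmed=True),
    ]
    for service, action in next_actions(my_requests, today=date(2025, 2, 1)):
        print(f"{service}: {action}")
```

Keeping the list in one place makes it obvious which services you have never contacted and which confirmed removals are old enough to be worth verifying again.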

Looking Ahead: The Control We Actually Have

Can we really control AI surveillance in 2025? The answer is nuanced. We can't stop technological advancement, but we can influence its direction. Commercial AI surveillance tools will proliferate—that's certain. However, awareness and policy pressure can catalyze meaningful change, even as tech's rapid evolution means privacy threats will persist.

Our first lines of defense remain education, regulatory advocacy, and personal action. The I-XRAY project serves as proof that when creators prioritize ethical implications over profit, they can shift entire conversations about technology's role in society.

The future of AI surveillance isn't predetermined. It will be shaped by the choices we make today—as individuals